Change Data Capture: What It Is and How to Use It

Jump To

What Is Change Data Capture?

Change data capture (CDC) is the process of recognising when data has been changed in a source system so a downstream process or system can action that change. A common use case is to reflect the change in a different target system so that the data in the systems stay in sync.

There are many ways to implement a change data capture system, each of which has its benefits. This post will explain some common CDC implementations and discuss the benefits and drawbacks of using each. This post is useful for anyone who wishes to implement a change data capture system, especially in the context of keeping data in sync between two systems.

Push vs Pull

There are two main ways for change data capture systems to operate. Either the source system pushes changes to the target, or the target periodically polls the source and pulls the changed data.

Push-based systems often require more work for the source system, as they need to implement a solution that understands when changes are made and send those changes in a way that the target can receive and action them. The target system simply needs to listen out for changes and apply them instead of constantly polling the source and keeping track of what it's already captured. This approach often leads to lower latency between the source and target because as soon as the change is made the target is notified and can action it immediately, instead of polling for changes.

The downside of the push-based approach is that if the target system is down or not listening for changes for whatever reason, they will miss changes. To mitigate this, queue- based systems are implemented in between the source and the target so that the source can post changes to the queue and the target reads from the queue at its own pace. If the target needs to stop listening to the queue, as long as it remembers where it was in the queue it can stop and restart where it left off without missing any changes.

Pull-based systems are often a lot simpler for the source system as they often require logging that a change has happened, usually by updating a column on the table. The target system is then responsible for pulling the changed data by requesting anything that it believes has changed.

The benefit of this is the same as the queue-based approach mentioned previously, in that if the target ever encounters an issue, because it's keeping track of what it's already pulled, it can restart and pick up where it left off without any issues.

The downside of the pull approach is that it often increases latency. This is because the target has to poll the source system for updates rather than being told when something has changed. This often leads to data being pulled in batches anywhere from large batches pulled once a day to lots of small batches pulled frequently.

The rule of thumb is that if you are looking to build a real-time data processing system then the push approach should be used. If latency isn’t a big issue and you need to transfer a high volume of bulk updates, then pull-based systems should be considered.

The next section will cover the positives and negatives of a number of different CDC mechanisms that utilise the push or pull approach.

Change Data Capture Mechanisms

There are many ways to implement a change data capture system. Most patterns require the source system to flag that a change has happened to some data, for example by updating a specific column on a table in the database or putting the changed record onto a queue. The target system then has to either watch for the update on the column and fetch the changed record or subscribe to the queue.

Once the target system has the changed data it then needs to reflect that in its system. This could be as simple as applying an update to a record in the target database. This section will break down some of the most commonly used patterns. All of the mechanisms work similarly; it’s how you implement them that changes.

Row Versioning

Row versioning is a common CDC pattern. It works by incrementing a version number on the row in a database when it is changed. Let’s say you have a database that stores customer data. Every time a record for a customer is either created or updated in the customer table, a version column is incremented. The version column just stores the version number for that record telling you how many times it’s changed.

It is popular because not only can it be used to tell a target system that a record has been updated, it also lets you know how many times that record has changed in the past. This may be useful information in certain use cases.

It’s most common to start the version number off from 0 or 1 when the record is created and then increment this number any time a change is made to the record.

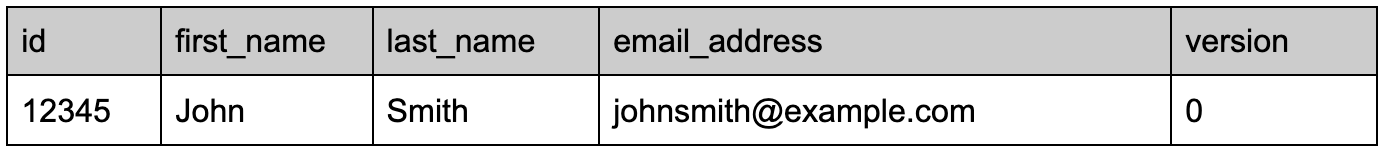

For example, a customer record storing the customer’s name and email address is created and starts with a version number of 0.

At a later date, the customer changes their email address, this would then increment the version number by 1. The record in the database would now look as follows.

For the source system, this implementation is fairly straight forward. Some databases like SQL Server have this functionality built in; others require database triggers to increment the number any time a modification is made to the record.

The complexity with the row versioning CDC pattern is actually in the target system. This is because each record can have different version numbers so you need a way to understand what its current version number is and then if it has changed.

This is often done using reference tables that for each ID, stores the last known version for that record. The target then checks if any rows have a version number greater than that stored in the reference table. If they do then these records are captured and the changes reflected in the target system. The reference table then also needs updating to reflect the new version number for these records.

As you can see, there is a bit of an overhead in this solution but depending on your use case it might be worth it. A simpler version of this approach is covered next.

Update Timestamps

In my experience, update timestamps are the most common and simplest CDC mechanisms to implement. Similar to the row versioning solution, every time a record in the database changes you update a column. Instead of this column storing the version number of the record, it stores a timestamp of when the record was changed.

With this solution, you lose a bit of extra data as you no longer know how many times the record has been changed, but if this isn’t important then the downstream benefits are worth it.

When a record is first created, the update timestamp column is set to the date and time that the record was inserted. Every subsequent update then overwrites that timestamp with the current one, again depending on the database technology you are using this may be taken care of for you, you could use a database trigger or build this into your application logic.

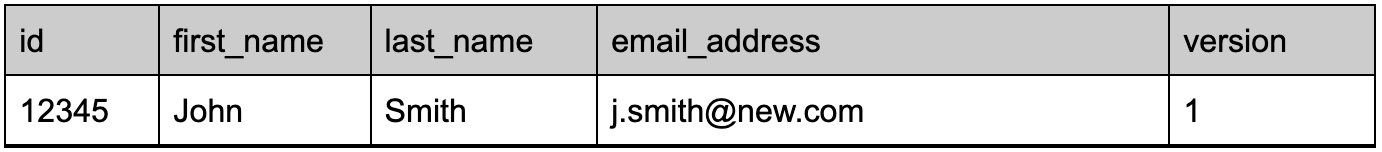

When the record is created the update timestamp is set.

If the record is modified, the update timestamp is set to the latest date and time.

The benefit of timestamps especially over row versioning is that the target system no longer has to keep a reference table. The target system can now just request any records from the source system that have an update timestamp greater than the latest one they have in their system.

This is much less overhead for the target system as it doesn’t have to keep track of every record’s version number. It can simply poll the source based on the maximum update timestamp it has and therefore will always pick up any new or changed records.

Publish and Subscribe Queues

The publish and subscribe (pub/sub) pattern is the first pattern that uses a push rather than pull approach. The row versioning and update timestamp solutions all require the target system to “pull” the data that has changed, in a pub/sub model the source system pushes the changed data.

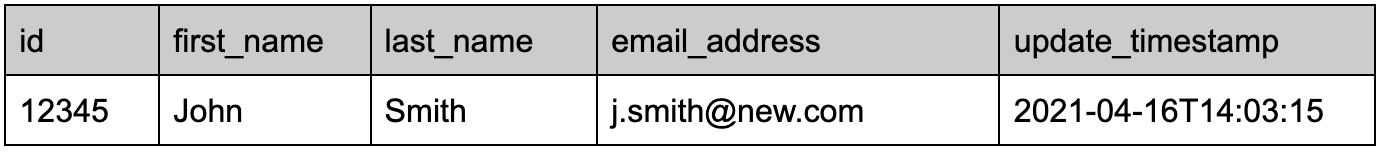

Normally, this solution requires a middle man that sits in between the source and the target as shown in Fig 1. Any time a change is made to the data in the source system, the source pushes the change to the queue. The target system is listening to the queue and can then consume the changes as they arrive. Again, this solution requires less overhead for the target system as it simply has to listen for changes and apply them as they arrive.

Fig 1. Queue-based publish and subscribe CDC approach

This solution provides a number of benefits, the main one being scalability. If during a period of high load the source system is updating thousands of records in a matter of seconds, the “pull” approaches will have to pull large amounts of changes from the source at a time and apply them all. This inevitably takes longer and will therefore increase the lag before they request new data and the lag time from the source changing to the target updating becomes larger. The pub/sub approach allows the source to send as many updates as it likes to the queue and the target system can scale the number of consumers of this queue accordingly to process the data quicker if necessary.

The second benefit is that the two systems are now decoupled. If the source system wants to change its underlying database or move the particular dataset elsewhere, the target doesn’t need to change as it would with a pull system. As long as the source system keeps pushing messages to the queue in the same format, the target can continue receiving updates blissfully unaware that the source system has changed anything.

Database Log Scanners

This method involves configuring the source database system so that it logs any modifications made on the data within the database. Most modern database technologies have something like this built in. It is fairly common practice to have replica databases for a number of reasons, including backups or offloading large processing from the main database. These replica databases are kept in sync by using these logs. When a modification is made on the master it records the statement in the log and the replica executes the same command and the two stay in sync.

If you wanted to sync data to a different database technology instead of replicating, you could still use these logs and translate them into commands to be executed on the target system. The source system would log any INSERT, UPDATE or DELETE statements that are run and the target system just translates and replicates them in the same order. This solution can be useful especially if you don’t want to change the source schema to add update timestamp columns or something similar.

There are a number of challenges with this approach. Each database technology manages these change log files differently.

- The files normally only exist for a certain period of time before being archived so if the target ever encounters an issue there is a fixed amount of time to catch up before losing access to the logs in their usual location.

- Translating the commands from source to target can be tricky especially if you’re capturing changes to a SQL database and reflecting them in a NoSQL database, as the way commands are written are different.

- The system needs to deal with transactional systems where changes are only applied on commit. So if changes are made and rolled back, the target needs to reflect the rollback too.

Change Scanning

Change scanning is similar to the row versioning technique but is usually employed on file systems rather than on databases. Similar to the row versioning method, change scanning involves scanning a filesystem, usually in a specific directory, for data files. These files could be something like CSV files and are captured and often converted into data to be stored in a target system.

Along with the data, the path of the file and the source system it was captured from is also stored. The CDC system then periodically polls the source file system to check for any new files using the file metadata it stored earlier as a reference. Any new files are then captured and their metadata stored too.

This solution is typically used for systems that output data to files, these files could contain new records but also updates to existing records again allowing the target system to stay in sync. The downside of this approach is that the latency between changes being made in the source and reflected in the target is often a lot higher. This is because the source system will often batch changes up before writing them to a file to prevent writing lots of very small files.

A Common CDC Architecture with Debezium

There are a number of technologies available that provide slick CDC implementations depending on your use case. The technology world is becoming more and more real time and therefore solutions that allow changes to be captured in real time are becoming more popular. One of the leading technologies in this space is Debezium. It’s goal is to simplify change data capture from databases in a scaleable way.

The reason Debezium has become so popular is that it can provide the real-time latency of a push-based system with often minimal changes to the source system. Debezium monitors database logs to identify changes and pushes these changes onto a queue so that they can be consumed. Often the only change the source database needs to make is a configuration change to ensure its database logs include the right level of detail for Debezium to capture the changes.

Fig 2. Reference Debezium Architecture

To handle the queuing of changes, Debezium uses Kafka. This allows the architecture to scale for large throughput systems and also decouples the target system as mentioned in the Push vs Pull section. The downside is that to use Debezium you also have to deploy a Kafka cluster so this should be weighed up when assessing your use case.

The upside is that Debezium will take care of monitoring changes to the source database and provide them in a timely manner. It doesn’t increase CPU usage in the source database system like pull systems would, as it uses the database log files. Debezium also requires no change to source schemas to add update timestamp columns and it can also capture deletes, something that “update timestamp” based implementations find difficult. These features often outweigh the cost of implementing a Debezium and a Kafka cluster and is why this is one of the most popular CDC solutions.

CDC at Rockset

Rockset is a real-time analytics database that employs a number of these change data capture systems to ingest data. Rockset’s main use case is to enable real-time analytics and therefore most of the CDC methods it uses are push based. This enables changes to be captured in Rockset as quickly as possible so analytical results are as up to date as possible.

The main challenge with any new data platform is the movement of data between the existing source system and the new target system, and Rockset simplifies this by providing built-in connectors that leverage some of these CDC implementations for a number of popular technologies.

These CDC implementations are offered in the form of configurable connectors for systems such as MongoDB, DynamoDB, MySQL, Postgres and others. If you have data coming from one of these supported sources and you are using Rockset for real-time analytics, the built-in connectors offer the simplest CDC solution, without requiring separately managed Debezium and Kafka components.

As a mutable database, Rockset allows any existing record, including individual fields of an existing deeply nested document, to be updated without having to reindex the entire document. This is especially useful and very efficient when staying in sync with OLTP databases, which are likely to have a high rate of inserts, updates and deletes.

These connectors abstract the complexity of the CDC implementation up so that developers only need to provide basic configuration; Rockset then takes care of keeping that data in sync with the source system. For most of the supported data sources the latency between the source and target is under 5 seconds.

Publish/Subscribe Sources The Rockset connectors that utilise the publish subscribe CDC method are:

- DynamoDB

- MongoDB

- MySQL

- Postgres

- Kafka

- Amazon Kinesis

Rockset utilises the inbuilt change stream technologies available in each of the databases (excluding Kafka and Kinesis) that push any changes allowing Rockset to listen for these changes and apply them in its database. Kafka and Kinesis are already data queue/stream systems, so in this instance, Rockset listens to these services and it’s up to the source application to push the changes.

Change Scanning

Rockset also includes a change scanning CDC approach for file-based sources including:

- Amazon S3

- Google Cloud Storage (GCS)

Including a data source that uses this CDC approach increases the flexibility of Rockset. Regardless of what source technology you have, if you can write data out to flat files in S3 or GCS then you can utilise Rockset for your analytics.

Which CDC Method Should I Use?

There is no right or wrong method to use. This post has discussed many of the positives and negatives of each method and each have their use cases. It all depends on the requirements for capturing changes and what the data in the target system will be used for.

If the use cases for the target system are dependent on the data being up to date at all times then you should definitely look to implement a push-based CDC solution. Even if your use cases right now aren’t real-time based, you may still want to consider this approach versus the overhead of managing a pull-based system.

If a push-based CDC solution isn’t possible then pull-based solutions are dependent on a number of factors. Firstly, if you can modify the source schema then adding update timestamps or row versions should be fairly trivial by creating some database triggers. The overhead of managing an update timestamp system is much less than a row versioning system, so using update timestamps should be preferred where possible.

If modifying the source system isn’t possible then your only options are: utilising any in-built change log capabilities of the source database or change scanning. If change scanning can’t be accommodated by the source system providing data in files, then a change scanning approach at a table level will be required. This would mean pulling all of the data in the table each time and figuring out what has changed by comparing it to what is stored in the target. This an expensive approach and only realistic in source systems with relatively small datasets so should be used as a last resort.

Finally, a DIY CDC implementation isn’t always easy, so using readymade CDC options such as the Debezium and Kafka combination or Rockset’s built-in connectors for real-time analytics use cases are good alternatives in many instances.